11.3.2: Community based action research

Edwin Blake, William Tucker, Meryl Glaser and Adinda Freudenthal

Community based action research (2004-2007)

SIMBA v1

This cycle continued the firming up of the Softbridge abstraction, and instigated a completely different reference implementation. SIMBA v1 provided bi-directional Deaf-to-hearing communication to the PSTN with a human relay operator. This cycle produced the first full-scale engagement with the Deaf community at the Bastion of the Deaf in Newlands. The DCCT NGO supported the intervention. We visited the Bastion twice weekly, where SIMBA was tested with a number of Deaf people who participated in a PC literacy course. The table below provides an overview.

| Cycle overview | Description |
| --- | --- |
| Timeframe | Mid 2004 - mid 2005 |
| Community | DCCT members |
| Local champion | Stephen Lombard (DCCT) |
| Intermediary | Meryl Glaser (UCT), DCCT staff |
| Prototype | SIMBA v1 |
| Coded by | Sun Tao (UWC) |
| Supervised by | William Tucker (UCT/UWC) |
| Technical details | (Blake & Tucker, 2004; Glaser & Tucker, 2004; Glaser et al., 2004, 2005; Sun & Tucker, 2004; Tucker, 2004; Tucker et al., 2004) |

SIMBA v1 cycle overview

Diagnosis

Several concerns emerged from the initial Softbridge trial. Firstly, we had to trial the technology with more users. However, Deaf users from the DCCT community would struggle with both PC and text literacy, causing problems for both Deaf and hearing users. Secondly, ASR was not adequate for generalised recognition. Lastly, we needed to put more students onto the project to avoid relying on only one programmer.

Plan Action

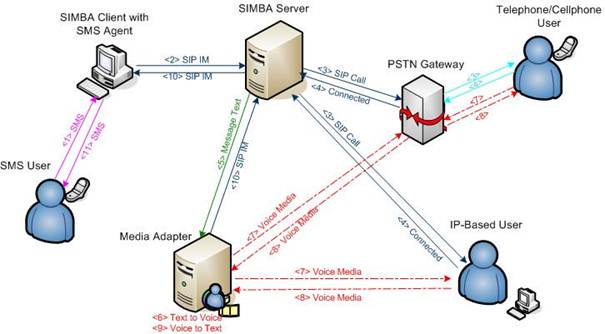

To begin to address these issues, we planned a small PC lab for the Bastion with Internet connectivity. We also needed a PC literacy course specifically dedicated to the target Deaf community so they could learn to use those computers. We also assigned parts of the project to several more students, one of whom came up with the next prototype architecture shown below.

SIMBA v1 architecture

SIMBA v1 was similar in design to the previous prototype, but had several key differences. SIMBA had tightly coupled web-services for TTS and ASR, replaced ASR with a human relay operator (though still wrapped as a web service), provided IM with SIP instead of Jabber and implemented VoIP with SIP instead of H.323. SIMBA was intended from the start to break out to the PSTN.

Implement Action

In mid-2004, Glaser et al. (2005) trained 20 Deaf people on basic PC literacy skills at the Bastion using the newly installed computer lab equipped with ADSL broadband connectivity. The course prepared participants for the trial of the next text relay prototype (Glaser et al., 2004). We spent two afternoons a week helping them get accustomed to typing, email and Internet. We also hired a Deaf assistant (a part-time DCCT staff member) to work with Deaf users, and an interpreter to help us interview the Deaf participants.

The SIMBA prototype enabled communication between a Deaf person using an IM client on a PC situated at the Bastion and a hearing person using a phone or cell phone. SIMBA had several significant differences from the prior Softbridge prototype. SIMBA bridged asynchronous IM with real-time voice with both VoIP and the PSTN. The Softbridge layers are shown below.

SIMBA v1 Softbridge stack

SIMBA v1 provided the next reference implementation of the Softbridge abstraction. Several modifications were based on previous experience. A human relay operator replaced the ASR with an interface wrapped as a web service so it could be easily replaced at a later date. SIMBA also bridged asynchronous IM text to fully synchronous VoIP with SIP and a SIP gateway to the PSTN. Hearing users could use a variety of devices: telephone, cell phone and PC-based soft phone.
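The idea of wrapping the relay operator behind the same interface as ASR, so that one could later be swapped for the other, can be illustrated with a minimal Python sketch. It is not SIMBA's actual web service: the names `SpeechToText`, `HumanRelayOperator`, `AutomaticRecogniser` and `relay_to_deaf_user` are hypothetical, and the web-service transport is replaced by plain function calls to keep the example self-contained.

```python
from typing import Callable, Protocol


class SpeechToText(Protocol):
    """Anything that can turn a chunk of caller audio into text."""

    def transcribe(self, audio: bytes) -> str: ...


class HumanRelayOperator:
    """A person listens to the audio and types what was said."""

    def __init__(self, ask_operator: Callable[[bytes], str]) -> None:
        # ask_operator is a hypothetical hook: it plays the audio to the
        # operator and returns whatever the operator typed.
        self._ask_operator = ask_operator

    def transcribe(self, audio: bytes) -> str:
        return self._ask_operator(audio).strip()


class AutomaticRecogniser:
    """Placeholder for an ASR engine that could be swapped in later."""

    def __init__(self, engine: Callable[[bytes], str]) -> None:
        # engine is a hypothetical callable standing in for a real recogniser.
        self._engine = engine

    def transcribe(self, audio: bytes) -> str:
        return self._engine(audio).strip()


def relay_to_deaf_user(audio: bytes, converter: SpeechToText) -> str:
    """Produce the IM text for the Deaf user without knowing who converted it."""
    return converter.transcribe(audio)
```

Because the calling code depends only on `transcribe`, replacing the human operator with an automated engine would, under these assumptions, require no change to the rest of the relay.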

Evaluate Action

Between the PC literacy class in July 2004 and the introduction of SIMBA in December, an average of six Deaf people came every Wednesday, and five people every Thursday to the PC lab at the Bastion. We had installed five PCs with video cameras. When we were not there, the PCs were covered with a cloth. This indicated that DCCT staff wanted to take care of the machines. However, no one would use them while they were covered. Demonstrating more dedication to the project, DCCT built a dividing wall in the office to create a distinct PC lab within their office space.

In 2005, we hired one of the PC literacy class participants (a part-time DCCT staff member) to keep the lab open during the day four days per week. Attendance averaged three people per day. We still visited twice weekly for two-hour research sessions, but the attendance during this cycle dropped off to as low as two people per session. We began exposing Deaf participants to SIMBA during these sessions.

We asked all potential SIMBA users, Deaf and hearing, to sign research consent forms. This was a long and drawn out process. Deaf people signed fifteen consent forms, and hearing people completed fourteen. Before initiating a SIMBA call, we would call the hearing recipient to inform them what was happening. Many of the initial SIMBA calls were hindered by the fact that the hearing people called were working at the time or the system malfunctioned or crashed.

We instrumented SIMBA to collect usage statistics and record conversation transcripts. We also added instrumentation to measure the delays in the various stages of the Deaf-to-hearing communication process. However, so few calls were made that the usage statistics were not useful. The only successful SIMBA calls were made between one of the DCCT staff and a hearing social worker assigned to DCCT. One of those calls was 45 minutes long, but most of them were much shorter. SIMBA transcripts were used to determine that hearing people could indeed understand the voice synthesised from Deaf text despite poor spelling and grammar (Zulu and Le Roux, 2005).
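Per-stage delay measurement of the kind described above could look roughly like the following Python sketch. The `DelayLog` class and the stage names are hypothetical illustrations, not the instrumentation that was actually built into SIMBA.

```python
import time
from contextlib import contextmanager


class DelayLog:
    """Records how long each named stage of a relayed message takes."""

    def __init__(self, clock=time.perf_counter):
        # The clock is injectable so the log can be exercised deterministically.
        self._clock = clock
        self.records = []  # list of (stage name, elapsed seconds)

    @contextmanager
    def stage(self, name):
        start = self._clock()
        try:
            yield
        finally:
            # Record the elapsed time even if the stage raised an error.
            self.records.append((name, self._clock() - start))

    def total(self):
        """Sum of all recorded stage delays, in seconds."""
        return sum(seconds for _, seconds in self.records)
```

A caller would wrap each step, for example `with log.stage("text-to-speech"): ...`, and inspect `log.records` afterwards to see which stage contributed most to the end-to-end delay.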

Reflection/Diagnosis

Engagement with more Deaf participants during the cycle led to the recognition of several significant challenges. Most importantly, both textual and PC literacy were evidently lacking. We began to address these deficiencies with training and follow-up sessions (Glaser et al., 2004; Glaser et al., 2005), yet both text and the PCs continued to intimidate the Deaf users. Typing skills were so poor that the scheduled research sessions consisted mostly of practice with a typing tutor. During the weekly visits in 2004, we observed that another reason Deaf participants were not using Internet-based communication tools was that they had no one to email with, and certainly no one to IM with as their friends and relatives did not have access to ICT at home or at work.

A number of technical issues arose from the SIMBA prototype. This was to be expected because it was the first prototype used outside of the laboratory. There were niggling user interface issues such as faulty scrolling and presence that were easily fixed. More serious problems involved continuous rebooting of the system components in order to get the system ready to make a call.

The research protocol also hindered usage of SIMBA because we insisted that all users, Deaf and hearing, sign consent forms. This proved difficult because we had to rely on the Deaf participants to deliver and return the signed consent forms from their hearing co-participants. They often did not understand that we wanted them to do this. In the end, we dropped the requirement for hearing participants since we were most interested in building solutions for Deaf people. We were also not recording the hearing user's voice, although we did record the relayed text. It was telling that the most successful SIMBA experiences were performed at the DCCT premises between two staff members (one Deaf, one hearing).

SIMBA v2

We hired the lead student from the previous cycle, Sun Tao, to code for the project full-time after he finished his MSc. His first task was to fix bugs and to provide awareness features for both Deaf and hearing users. This resulted in an innovative audio isTyping awareness feature that let the hearing user 'hear' when the Deaf user was typing. Another MSc student wrapped SIMBA with a guaranteed delivery framework for both synchronous and asynchronous communication. SIMBA trials continued on a weekly basis, with very little change in attendance and usage. The table below provides an overview.

| Cycle overview | Description |
| --- | --- |
| Timeframe | Late 2005 |
| Community | DCCT members |
| Local champion | Stephen Lombard (DCCT) |
| Intermediary | Meryl Glaser (UCT), DCCT staff |
| Prototype | SIMBA v2 |
| Coded by | Sun Tao (UWC), Elroy Julius (UWC) |
| Supervised by | William Tucker (UCT/UWC) |
| Technical details | (Hersh & Tucker, 2005; Julius, 2006; Julius & Tucker, 2005; Sun, 2005) |

SIMBA v2 cycle overview

Plan Action

This cycle's main focus was to fix the bugs in SIMBA v1 and make SIMBA v2 more usable and reliable. We had noted that the long latencies made communication difficult for hearing users by upsetting expected voice conversation rhythms. We planned to leverage IM awareness and presence mechanisms to deal with such macro latency. A simple message on the IM client GUI (graphical user interface) would let the Deaf person know that someone was speaking, and furthermore, that speech was being converted to text. We wanted to provide a similar awareness feature for the hearing user, such that the hearing person would know that text was being typed and/or converted to voice. We would play a tone to let the hearing user know that this was happening. We called this 'audio isTyping'.

Another MSc student joined the project. His research topic was to explore guaranteed delivery of semi-synchronous messaging (Julius & Tucker, 2005). The purpose of his study was to demonstrate to the Deaf user that SIMBA could guarantee delivery of messages where SMS could not.

Since we had poor participation during the weekly sessions in the previous cycle, we decided to concentrate on the DCCT staff members who were working at the Bastion. In order to do that, we had to expand the wireless network and put PCs on their office desks.

Implement Action

We identified and fixed many SIMBA bugs, and made a number of improvements during this cycle: we added configuration parameters for gateways, supported dynamic instead of fixed IP addresses, handled a busy signal, enabled the relay operator to terminate a call, parameterised the TTS engine via the SIMBA interface and recovered when the telephone hung up. One particular problem with the relay operator's audio was difficult to fix: the operator heard the outgoing result of the Deaf user's TTS. The most significant feature addition was isTyping for the Deaf user, and audio isTyping for the hearing user (see below). The overall SIMBA architecture remained as depicted above.

SIMBA v2 audio isTyping awareness feature

The audio isTyping feature caused SIMBA to play music for the hearing user when the Deaf user was typing text. SIMBA also provided awareness for the Deaf user. A message was displayed on the Deaf user's IM client when the hearing user was speaking.

An offshoot of SIMBA, built with the Narada brokering facility from Indiana University (www.naradabrokering.org), was implemented as NIMBA (Julius, 2006). NIMBA provided guaranteed delivery of real-time components with forward error correction and of store-and-forward components with Narada.

Several old PCs had been donated to DCCT and we put these in the staff offices. We extended the WiFi network to include staff offices with a second AP with a strong directional antenna (borrowed from our rural telehealth project) to get through the thick brick walls.

Evaluate Action

NIMBA experiments were conducted with several regular attendees, but we had little success getting them to use either NIMBA or SIMBA. It continued to be very difficult to reach hearing co-participants. There was only one instance when a Deaf person asked us to set up a SIMBA call for them. Instead, we were continually asking them to make a SIMBA call. One person noted that if a Deaf person wanted to contact a hearing person, they would just use SMS.

One technical problem with SIMBA was that its FOSS TTS engine, FreeTTS, stopped processing text after the first full stop (period) in the Deaf user's message. Thus, when a Deaf user typed a long message with multiple sentences, only the first sentence would be sent to the hearing user. Therefore, we encouraged the Deaf participants to use short single sentences. FreeTTS also did not intonate punctuation like other TTS engines, so the result appeared quite mechanical and synthetic to hearing users.
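The project worked around the full-stop problem by asking users to type short single sentences. One software-side alternative, which is purely an assumption here and not something the project reports having done, would be to split a message at sentence boundaries and hand each sentence to the TTS engine separately. A minimal Python sketch, with the hypothetical `speak` callable standing in for whatever TTS call is in use:

```python
import re


def split_sentences(message: str) -> list[str]:
    """Split a message after '.', '!' or '?' when followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", message.strip())
    return [part for part in parts if part]


def speak_all(message: str, speak) -> None:
    """Send each sentence to the TTS engine as its own request.

    `speak` is a hypothetical callable that synthesises one string.
    """
    for sentence in split_sentences(message):
        speak(sentence)
```

This simple split would mishandle abbreviations such as "Dr. Smith", so it is a starting point rather than a complete sentence segmenter.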

Reflection/Diagnosis

During focus group sessions, DCCT staff members identified several inhibiting factors regarding the poor take-up of SIMBA. Deaf users could not use the system when we were not there. Firstly, this was due to the continued problems with operating SIMBA. Secondly, SIMBA was closely associated with our presence. Because of the poor take-up, we did not hire a relay operator so one was not always available. The end result was that Deaf users could not use the system any time they wanted.

Consent forms also inhibited take-up. Very few people brought in consent forms for hearing users. At first, the Deaf users completely misunderstood that the consent form was supposed to be signed and brought back because they had had little experience with research and research protocol. They often brought back the written project information sheet instead. They also had difficulty understanding the text. We made graphical sketches of the system, and then put large posters in the Bastion (see below). Those helped explain the project to the Deaf users better than the written text.

Visual information sheet for the SIMBA system

The Deaf users had difficulty with the written information sheet for the project. We drew this graphical depiction of the system for them, and also placed a large poster in the PC room at the Bastion. We would write subsequent information sheets (for other prototypes) in point form to make it easier for an interpreter to translate into SASL.

Our operation hours were also awkward. They may have been convenient for the Deaf users, but were not convenient for the hearing users. Thursday evening sessions were problematic because many hearing co-participants were Muslim, and did not want to be disturbed at dusk during prayer time. The other time slot was Friday morning when hearing participants were working.

Some significant non-research events also occurred during this cycle. The PC lab assistant left to have a child and was replaced by someone else who became difficult and ceased working for the project. Thus, we realised we should employ more than one assistant in case we had similar problems in the future. Our initial intermediary resigned her post at UCT and began working for another Deaf NGO called SLED (Sign Language Education and Development, www.sled.org.za). However, she remained active with DCCT, and with our project.

Most significantly during this cycle, DCCT said they would budget for ADSL the following year. This demonstrated buy-in to the project, and acknowledged the importance of having the computers at the Bastion. We thus came to view DCCT also as intermediaries.

We learned that the Deaf people in this community were not accustomed to calling or sending an SMS to 'any sector'. They were used to communicating within their tight knit Deaf circle, and felt cut off from other sectors. When asked what they wanted from a SIMBA-like system, some of the Deaf people replied that they wanted to use SASL instead of text and one recommended access to SIMBA with SMS in addition to IM. Since we were still trying to automate as much of the system as possible, we opted to follow up on the latter request.

SIMBA v3

A third version of SIMBA provided an SMS interface to the Deaf user. SIMBA v3 trials continued on a weekly basis, yet also failed to attract users. However, the project saw significantly increased use of the PCs in the Bastion. The table below provides an overview.

| Cycle overview | Description |
| --- | --- |
| Timeframe | Early 2006 |
| Community | DCCT members |
| Local champion | Stephen Lombard (DCCT) |
| Intermediary | Meryl Glaser (SLED), DCCT staff |
| Prototype | SIMBA v3 |
| Coded by | Sun Tao (UWC) |
| Supervised by | William Tucker (UCT/UWC) |
| Technical details | None published |

SIMBA v3 cycle overview

Plan Action

A brief overview of the technical design of the SMS interface is presented in the transaction diagrams below. SIMBA v3 was particularly interesting in that it involved bridging at all seven Softbridge stack layers (see below).

Implement Action

An SMS agent was integrated into a SIMBA client. The SIMBA server was not changed. The SMS agent used a GPRS card to send/receive an SMS. To initiate a SIMBA call, the Deaf user sent a specially formatted SMS to the SMS agent. From then on, the user sent and received SMS as normal, with SIMBA performing relay to a telephone.

SIMBA v3 Softbridge stack

SIMBA v3 was particularly interesting because it involved bridging at all seven Softbridge layers. The SMS interface for the Deaf user entailed differences in power provision, network access, end-user devices, text and voice media, synchronous and asynchronous temporalities, user interfaces and of course between Deaf and hearing people.

SMS interface to SIMBA v3

This diagram shows the sequential flow required to add the SMS interface to SIMBA. The arcs are defined below.

1. SMS user sends an SMS to SMS Agent. The message looks like "*0722032817* how are you?". The content between the asterisks is the called user's number or name.
2. SMS Agent extracts the message from SMS and formats a SIP IM for the SIMBA Server. The SIP IM looks like "sip: 0722032817@Softbridge.org".
3. SIMBA Server receives the message and sets up a SIP call to the called user, either via a PSTN Gateway for a telephone/cell phone or directly if it is an IP-based user.
4. Called user answers the call and a connection is set up.
5. SIMBA Server sends the IM message text to Media Adapter.
6. Media Adapter converts text to voice.
7. Media Adapter sends voice stream to called user.
8. Voice stream is sent from called user to Media Adapter.
9. Media Adapter converts voice to text via Relay Operator.
10. Media Adapter packs text to SIP IM and sends it to SMS Agent via SIMBA Server.
11. SMS Agent receives SIP IM, gets text message, packs to SMS and sends to a Deaf user.

Flow diagram for SMS interface to SIMBA v3
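Steps 1 and 2 of the flow above, in which the SMS Agent extracts the called party and the message text and derives a SIP address, can be sketched in Python as follows. The function names are hypothetical and the parsing rules are inferred only from the single example message given in step 1; the real SMS Agent may have behaved differently. The generated address omits the space shown after "sip:" in step 2, since a valid SIP URI does not contain one.

```python
import re

# Domain taken from the example address in step 2.
SIP_DOMAIN = "Softbridge.org"

# "*<number or name>* <message text>", as in the example in step 1.
_SMS_PATTERN = re.compile(r"^\*(?P<callee>[^*]+)\*\s*(?P<text>.*)$", re.DOTALL)


def parse_call_sms(body: str):
    """Return (callee, text) for a call-initiating SMS, or None otherwise."""
    match = _SMS_PATTERN.match(body.strip())
    if match is None:
        return None
    return match.group("callee").strip(), match.group("text").strip()


def to_sip_uri(callee: str, domain: str = SIP_DOMAIN) -> str:
    """Build the SIP address that the IM is sent to."""
    return f"sip:{callee}@{domain}"
```

For the example message, `parse_call_sms` yields the number and the text separately, and `to_sip_uri` turns the number into the address used for the SIP IM. An SMS without the leading asterisk-delimited field is treated here as an ordinary message within an existing call, which is an assumption based on the statement that the user then "sent and received SMS as normal".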

Evaluate Action

Experimentation with SIMBA v3 occurred as in earlier cycles, in twice-weekly research sessions. Participation continued to be sparse, despite increased daily attendance for the open lab. As expected, the SMS interface exhibited long latencies due to 'tap' time. Unfortunately, take-up did not improve and we conceded that SIMBA was not going to be taken up as a service by this community.

Reflection

The SMS interface and isTyping features were not enough to boost SIMBA usage. In hindsight, both were technically interesting ideas, but added little value for potential users. We came to realise that addressing perceived problems with yet more technical bells and whistles was not going to improve take-up. The problems were deeper, and more social in nature. We were told by DCCT leaders that perhaps the Deaf community was so close knit that the members really had no desire to 'talk' to people outside the community. They were more interested in ICT that enabled them to communicate with one another, like SMS, email, IM and especially video conferencing. This was clearly evident by attendance during the week at the PC lab. With two lab assistants, the open days for the lab were increased to six days/week, and overall attendance increased dramatically. While technical research with SIMBA was floundering, the lab was being used in record numbers two years after we had introduced the PCs.

Related to the sentiment above that our technical concerns were overshadowed by the social factors, we contemplated the efficacy of the informed participation approach as described by Hersh and Tucker (2005). At the time, our main concern was to explore QoC and learn how macro latencies could be overcome to provide a still usable communication platform. We quite openly discussed delay issues with participants. It might have been that they were confused about the purpose of the project: was it PCs, Deaf telephony, or delay?

With a full-time programmer on the project, we were able to more quickly address bugs and enhancements. Unfortunately, the lead programmer immigrated to Canada and we replaced him with another MSc graduate.

DeafChat

We next built a real-time text chat system that sent characters to chat participants as they were typed instead of waiting for the terminating new-line. DeafChat proved to be very popular during the weekly research sessions. The Deaf users actively participated in the design of the tool by offering feedback. Since the programmer was not a student, we were able to react quickly to their suggestions. The table below provides an overview.

| Cycle overview | Description |
| --- | --- |
| Timeframe | Mid-late 2006 |
| Community | DCCT members |
| Local champion | Stephen Lombard (DCCT) |
| Intermediary | Meryl Glaser (SLED), DCCT staff |
| Prototype | DeafChat |
| Coded by | Yanhao Wu (UWC) |
| Supervised by | William Tucker (UCT/UWC) |
| Technical details | None published |

DeafChat cycle overview

Diagnosis

It appeared that the Deaf people were more interested in communicating with each other than with hearing people. We had introduced them to IM systems like MSN, Skype and AIM, but rarely saw them using those tools. Many of the Deaf participants had prior experience with the Teldem, even if they did not own one or use one. We reasoned that a real-time text chat tool, similar to the Teldem in synchrony, might appeal to Deaf people.

Plan Action

We designed a real-time text tool that transmitted characters in real-time, similar to the Teldem. Unlike the Teldem, however, this tool would support multiple participants, have a PC GUI interface and identify users. IM clients typically transmitted in 'page mode', meaning that text was transmitted in chunks defined by the user hitting the Enter key (new-line). The Teldem operated in 'session mode', sending one character at a time. Thus, the plan was to imbue an IM client with Teldem-like synchrony since we could not add IM functionality to the Teldem.

Implement Action

We built two versions of DeafChat, both of which were client-server in nature. The first version was built with SIP, using the MESSAGE mechanism to pass one character at a time to the server that would then broadcast the characters to all connected clients. The clients managed the screen, relegating the character to the appropriate position. A sample interface is shown below. Based on user feedback, we colour coded users' text and improved the interface. We subsequently moved the entire application to a Java-coded web-based client to remove the necessity of having to install and upgrade software.

DeafChat interface

The standalone SIP implementation of DeafChat had implicit isTyping. As a user typed, characters were sent to all other chat participants and positioned according to that user's current message. After pressing Enter, a 'GA' token appeared to indicate: "I am finished typing now". GA was a holdover from the Teldem. Multiple users could type simultaneously. Any characters typed in a local input box appeared both in the local output box and any connected client's output box in the correct position in real-time.
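The character-at-a-time behaviour described above can be sketched in Python as follows. The sketch is an illustration only: it replaces the SIP MESSAGE transport with direct function calls, and the `ChatScreen` class and `broadcast` function are hypothetical names rather than parts of DeafChat.

```python
class ChatScreen:
    """Client-side view: places each incoming character in its sender's line."""

    GA = "GA"  # 'go ahead' token shown once a user presses Enter

    def __init__(self):
        self.lines = []   # [user, text] pairs, ordered by first keystroke
        self._open = {}   # user -> index of the line that user is still typing

    def receive(self, user, char):
        if char == "\n":
            # Enter closes the user's current message and marks it with GA.
            index = self._open.pop(user, None)
            if index is not None:
                self.lines[index][1] += f" {self.GA}"
            return
        if user not in self._open:
            # First character of a new message starts a new line for this user.
            self._open[user] = len(self.lines)
            self.lines.append([user, ""])
        self.lines[self._open[user]][1] += char

    def render(self):
        return [f"{user}: {text}" for user, text in self.lines]


def broadcast(screens, user, char):
    """Server-side fan-out: every connected client receives every character."""
    for screen in screens:
        screen.receive(user, char)
```

Interleaved keystrokes from two users end up in two separate lines on every screen, so a glance at the screen shows who is typing, which is the implicit isTyping awareness the caption refers to.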

Evaluate Action

DeafChat was well received by the regular research session participants in a way we had not experienced before. During this cycle, the whole group frequently used DeafChat during the research sessions. When new people came in, they often asked to get on it, too. The tool was also used by the weekly literacy course. Sometimes, they would already be involved in a group chat before we arrived. They also did not mind us participating in their chats, which mostly consisted of poking fun at one another, and at us. DeafChat was rather basic. Login was not authenticated and the application was limited to the local area network, but these issues did not matter to the Deaf users.

Reflection

There were several innovative technical features of the tool. At the network layer, the tool appropriated SIP messages to deliver text characters in real-time. SIP was designed for real-time voice and video (Handley et al., 1999), and only provided for asynchronous text with SIMPLE (Campbell et al., 2002). At the interface layer, DeafChat provided isTyping awareness implicitly. A user could always tell what the other chat participants were doing (or typing) based on a quick visual scan of the screen. DeafChat was deployed on PCs at the Bastion, yet the web-based client interface made it possible to port DeafChat to cell phones. However, that would still require a GPRS, 3G or WiFi data connection.

After struggling for so long with the SIMBA prototypes, we were pleasantly surprised by the instant popularity of DeafChat. However, that popularity was short-lived. After the novelty wore off and Yanhao Wu (the programmer) left the project, DeafChat retired into the same disuse that befell prior prototypes. In spite of that, there were several instructive explanations for its brief success. Unlike SIMBA, DeafChat was fundamentally a Deaf-to-Deaf tool. DeafChat was modelled on Teldem-like modalities familiar to the participants, and enabled them to feel comfortable with textual content amongst themselves, much as with SMS. It was notable that the participants were also comfortable with the researchers participating in the chats. The participants had acquired a solid base of computer literacy via the previous prototype trials. In addition, most of them were also participants in an on-going English literacy course that was co-scheduled with the research visits. Thus, the Deaf participants felt more confident using both ICT and English text. Most importantly, participants used DeafChat with each other because they all had the same degree of technology access in the lab during the research sessions. This also explained why DeafChat could not achieve larger penetration into the Deaf community; a user had to be physically in the lab to use it.

When the circle was closed and small, DeafChat usage was encouraging. Yet outside the circle, potential users were sidelined by lack of access to technology.

DeafVideoChat

We exposed Deaf users to several off-the-shelf video IM tools to respond to the need for SASL communication. None of the common IM video tools appeared to support sign language conversation with webcams, even relatively high-end webcams with large amounts of P2P bandwidth on a local network. Thus, we conducted a preliminary investigation into asynchronous video in 2006 with an Honours (4th year) project. We continued the project in 2007-8 as an MSc project. We also began to explore an innovative gesture recognition interface to the asynchronous video prototype. The table below provides an overview of the cycle.

| Cycle overview | Description |
| --- | --- |
| Timeframe | 2006-2008 |
| Community | DCCT, SLED |
| Local champion | Richard Pelton (DCCT) |
| Intermediary | Meryl Glaser (SLED), DCCT staff |
| Prototype | DeafVideoChat |
| Coded by | Zhenyu Ma (UWC), Russel Joffe (UCT), Tshifhiwa Ramuhaheli (UCT) |
| Supervised by | William Tucker (UCT/UWC), Edwin Blake (UCT) |
| Technical details | (Ma & Tucker, 2007, 2008) |

DeafVideoChat cycle overview

Diagnosis

A primary need expressed by Deaf participants was to communicate in SASL. We had temporary success with DeafChat, but it did not support video. We reasoned that we should abandon the 'do-it-yourself' approach characterised by the SIMBA cycles and expose the Deaf users to off-the-shelf video tools in order to learn about their advantages and disadvantages.

Plan Action

We would expose the Deaf participants to off-the-shelf free video tools available online. The aim was to familiarise them with what was available, encourage them to use the tools and to learn how they performed with respect to Deaf needs. While participants were exploring synchronous video, we would also investigate asynchronous video to provide higher quality.

Implement Action

DCCT participants dismissed Skype and other common video chat tools because of poor video quality for sign language. The tools appeared to be designed for hearing users, prioritising voice over video quality. Thus, sign language communication was blurry and jerky. This prompted us to implement an asynchronous video tool, herein called DeafVideoChat. It was a simple tool with two video windows as shown below. The outgoing recorded video could be replayed to check for correctness, and the incoming video could also be replayed. Exploratory experiments were carried out with various compression techniques, and also with several transmission techniques in order to determine an optimal combination (Ma & Tucker, 2007). Further experimentation at an MSc level resulted in the integration and optimal configuration of the x264 codec into the system (Ma & Tucker, 2008). We also began work on a gesture recognition interface. This should not be confused with sign language recognition. The point of the gesture recognition interface was to provide gestured control of the asynchronous video tool, e.g. to start or stop recording.

We upgraded the webcams in DCCT twice over the course of this cycle. After frustration with tools like MSN and Skype, some DCCT users started using Camfrog (www.camfrog.com) as their preferred video tool for remote sign language communication. We bought Camfrog Pro licenses to enable the use of full screen video and some other features. Some DCCT users took advantage of the social networking that Camfrog offered for Deaf communities around the world. Camfrog's lack of privacy controls made it cumbersome for users who did not enjoy open access from the global Internet community. DCCT users also used the tools to chat to the SLED NGO, and the researchers.

Evaluate Action

Iterative revisions of DeafVideoChat configured the x264 video codec to improve sign language compaction for store-and-forward transmission. One of the Deaf participants reported that it was the first time he had seen clear enough video to understand the sign language. However, Deaf participants did not take to DeafVideoChat as they had to DeafChat. Firstly, they were more interested in real-time communication. Secondly, the issues regarding the size of the connectivity circle were still relevant. No Deaf people in the potential connectivity circle physically outside the Bastion could communicate with the tool.

DeafVideoChat interface

The Capture window on the left was for user1 to capture a SASL video message to send to user2. The Playing window on the right was for user1 to play and replay the last SASL message received from user2. Information messages were displayed in English in the bottom right-hand corner.

On the real-time front, even though Camfrog Pro enabled full screen video, the Deaf users chose a smaller screen size in order to increase video quality. We noted that several frequent Camfrog users with DCCT tended to use Camfrog for its community features. Meanwhile, we were aware that SLED (another Deaf NGO nearby) actively used Camfrog to conduct its daily business because they had two offices, one in Cape Town and one in Johannesburg. SLED users had deemed Camfrog to offer superior quality to Skype.

A prototype of the gesture recognition interface was shown to Deaf users with favourable responses. Unfortunately, the gesture recognition interface project was temporarily halted then restarted as the responsibility passed from one MSc to another.

Reflection

DeafVideoChat clearly offered superior video quality with respect to sign language comprehension. Still, Deaf users who actually used a remote video tool chose Camfrog instead. It should be noted that very few of the participants used a video communication tool outside the research sessions. There were only a couple of regular DCCT video users. SLED, on the other hand, adopted Camfrog as a part of their everyday business conduct.

There were several explanations why regular users preferred the lower quality tool, and why so few DCCT participants used the tool. Camfrog was synchronous and was therefore easier to use than the asynchronous interface that required numerous button presses to record, receive and send video. Camfrog also had the advantage of being clearly associated with Deaf users on an international basis. Camfrog provided established chat rooms for users from all over the world. Deaf users related to Camfrog as a SASL tool whereas Skype was a text tool even though it also supported video. DeafVideoChat, on the other hand, was a research tool clearly associated with our weekly visits. Camfrog's perceived superior quality may have been linked to these social issues rather than purely technical ones. We cannot say for sure because we could not perform automated objective video quality tests on Camfrog or Skype as we could with DeafVideoChat because the internals of the web tools were not accessible via open source. On a related issue of users adopting a lesser quality tool, Camfrog had many Skype features with respect to media and temporal modalities, but was much less sophisticated in terms of security and privacy. This lack of features, however, did not deter users from adopting Camfrog.

SLED users appropriated tools like Camfrog (for SASL) and Skype (for text) into their daily activities more than DCCT users. This could be explained by the fact that the two SLED offices needed to communicate with each other because of geographic distance. Furthermore, end-users at both SLED offices had very similar attributes, e.g. education, PC literacy, PCs with web cams on their desks and broadband connectivity that encouraged the appropriation of a tool like Camfrog. On the other hand, while most DCCT participants had a PC on their desk at offices throughout the Bastion building, face-to-face contact was preferable to and more convenient than virtual contact. More importantly, DCCT-resident end-users had much more ICT4D access than off-site Deaf and hearing users in their potential connectivity circle, especially with regard to physical access to ICT. Therefore, the connectivity circle at the Bastion was artificial at best. Even though the Deaf telephony prototypes may have functioned adequately, there was no need or even genuine opportunity for DCCT participants to use the tools, as was the case at SLED. The lack of take-up of research prototypes at DCCT and the simultaneous take-up of Skype and Camfrog at SLED demonstrated that the social considerations were fundamentally more important than the technical attributes of any ICT4D system we could devise.